Top AI & Tech News (Through October 26th)

ASI Ban 🚫 | No Jobs 📉 | AI News Assistants 📰

Hello AI Citizens,

Should we ban artificial superintelligence or build it under tight control? A ban could buy time to improve alignment and prevent irreversible harm, but it may also push work underground, entrench a few state actors, and stall life-saving breakthroughs. The middle path is “constrained progress”: allow narrowly scoped advances while putting hard brakes on open-ended autonomy and self-improvement. For CAIOs, that means capability thresholds (what your systems may and may not do), independent red-teaming and evals before each scale jump, and compute governance (caps, audit trails, and kill-switches tied to risk). It also means tiered deployment (sandbox → limited pilot → guarded production), strict human-in-the-loop for real-world actions, incident reporting, and strong provenance: opt-in data only, watermarks/traceability on outputs, and clear user consent. Align incentives by funding a safety budget separate from product, tying leadership comp to safety KPIs, and inviting external auditors and community boards into the process. In short: don’t race to ASI—raise the bar; make each step legible, testable, and reversible.

Here are the key headlines shaping the AI & tech landscape:

Open Letter Calls for a Ban on Superintelligent AI Development

Jobless Growth Takes Hold: Productivity Up, Hiring Down

Reddit Sues Perplexity Over Alleged Scraping of User Posts

Google Research’s “Magic Cycle”: Cancer AI, Quantum Milestone, and Earth AI

Amazon Debuts AI Smart Delivery Glasses for Last-Mile Delivery

AI Assistants Flub News 45% of the Time, Global BBC/EBU Study Finds

Let’s recap!

Open Letter Calls for a Ban on Superintelligent AI Development

More than 700 signatories, including Nobel laureates, AI pioneers, Steve Wozniak, faith leaders, and public figures, backed a Future of Life Institute letter urging a prohibition on building “superintelligence” until it’s provably safe and has broad public buy-in. The letter defines superintelligence as AI that surpasses humans on all useful tasks and warns it could be as little as 1–2 years away. Polling released alongside the letter says 64% of Americans don’t want superintelligence developed until it’s proven safe, while only 5% want maximum speed. The campaign spotlights a widening gap between big-tech roadmaps and public comfort levels. Source: TIME (Oct 22, 2025).

💡 Expect louder calls for rules and potential pauses on frontier models—have a public, simple policy on what you will and won’t build. Focus near-term value (assistive AI, copilots, analytics) and publish easy-to-read safety notes: how you test, who approves launches, and how people can report issues. Add clear red lines (no autonomous decision-making on high-risk tasks without human sign-off) and contingency plans (kill switches, incident playbooks). Engage employees and customers: short FAQs, open forums, and opt-outs build trust while regulators figure out the big stuff.
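For teams that want to turn the red line and kill-switch ideas above into something testable, here is a minimal sketch. The names `Risk` and `may_execute` are hypothetical and purely illustrative; real policies will need more tiers and an audit trail.

```python
from enum import Enum


class Risk(Enum):
    """Hypothetical two-level risk rating for an AI-initiated action."""
    LOW = 1
    HIGH = 2


def may_execute(risk: Risk, human_approved: bool, kill_switch_on: bool) -> bool:
    """Return True only if the action is allowed under the red-line policy."""
    # The kill switch overrides everything, including human approval.
    if kill_switch_on:
        return False
    # High-risk actions always need an explicit human sign-off.
    if risk is Risk.HIGH:
        return human_approved
    # Low-risk actions may proceed without sign-off.
    return True
```

The point of the design is that the default for high-risk work is "no": the system has to be given approval, not denied it.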

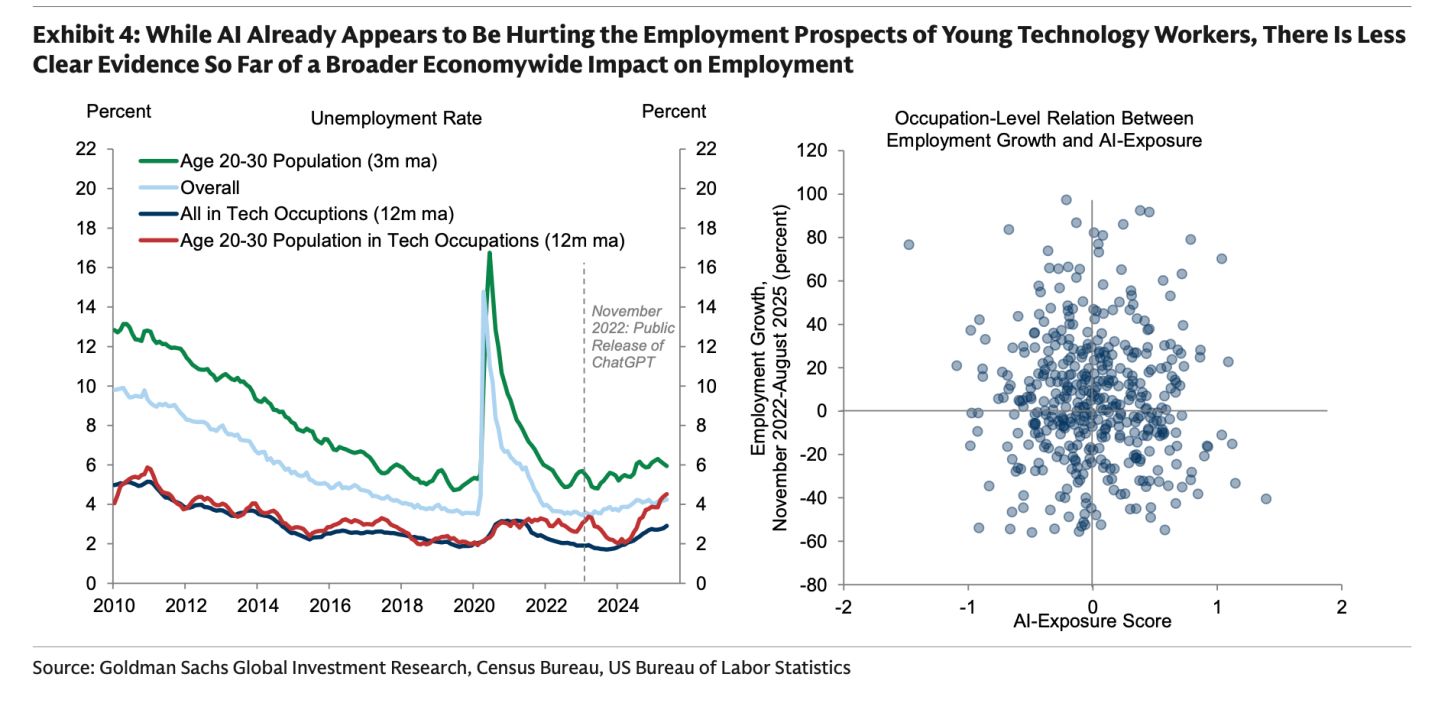

Jobless Growth Takes Hold: Productivity Up, Hiring Down

Goldman Sachs says the U.S. may be entering a “low-hire, low-fire” era where GDP grows on AI-driven productivity, but net hiring (outside healthcare) stays weak. Fed officials echo the softness, and Goldman warns the bigger labor impacts of AI often show up in recessions when firms restructure. Early signs: entry-level and young tech workers in automation-exposed roles are feeling the pinch. Source: Fortune

💡 Use AI to augment, not just replace. Stand up reskilling tracks and protected “apprentice” pipelines so juniors still get real work. Track value with people-friendly KPIs (cycle time, error rate, customer NPS) rather than headcount cuts. Add “no-regret” guardrails: change-impact reviews before automations go live, human-in-the-loop on high-stakes tasks, and a recession playbook that favors redeploy/upskill over RIFs.

Reddit Sues Perplexity Over Alleged Scraping of User Posts

Reddit filed a lawsuit accusing AI search startup Perplexity of illegally scraping copyrighted Reddit content via third parties (Oxylabs, AWMProxy, SerpApi) to train or power its service. Reddit says its posts are now heavily cited by Perplexity and argues AI firms are fueling an “industrial-scale data laundering” economy, while Perplexity denies training on Reddit data and calls the suit an attempt to force licensing. The case follows Reddit’s similar action against Anthropic and comes as Reddit monetizes data via licenses with OpenAI and Google. Source: CNBC

💡 Treat web data as licensed, not “free.” Audit vendors for source provenance, require indemnities, and whitelist only content with clear rights. Build an internal “clean corpus” policy (licenses, opt-ins, public-domain, your own data) and log citations in outputs. Expect rising costs for high-quality human conversation data; budget for licenses or pivot to first-party data collection.
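A “clean corpus” policy can start as a simple allowlist check on each record's rights basis. The sketch below is one possible shape, not a standard: the field names `rights` and `source` and the labels in `ALLOWED_RIGHTS` are assumptions made for illustration.

```python
# Hypothetical rights labels mirroring the policy: licenses, opt-ins,
# public-domain material, and your own first-party data.
ALLOWED_RIGHTS = {"licensed", "opt_in", "public_domain", "first_party"}


def filter_clean_corpus(records):
    """Split records into (kept, rejected) based on rights and provenance."""
    kept, rejected = [], []
    for rec in records:
        # Keep a record only if its rights basis is allowlisted AND it
        # carries a non-empty source, so provenance can be audited later.
        if rec.get("rights") in ALLOWED_RIGHTS and rec.get("source"):
            kept.append(rec)
        else:
            rejected.append(rec)
    return kept, rejected
```

Keeping the rejected list, rather than silently dropping records, gives you something to show a vendor or auditor when a source fails the check.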

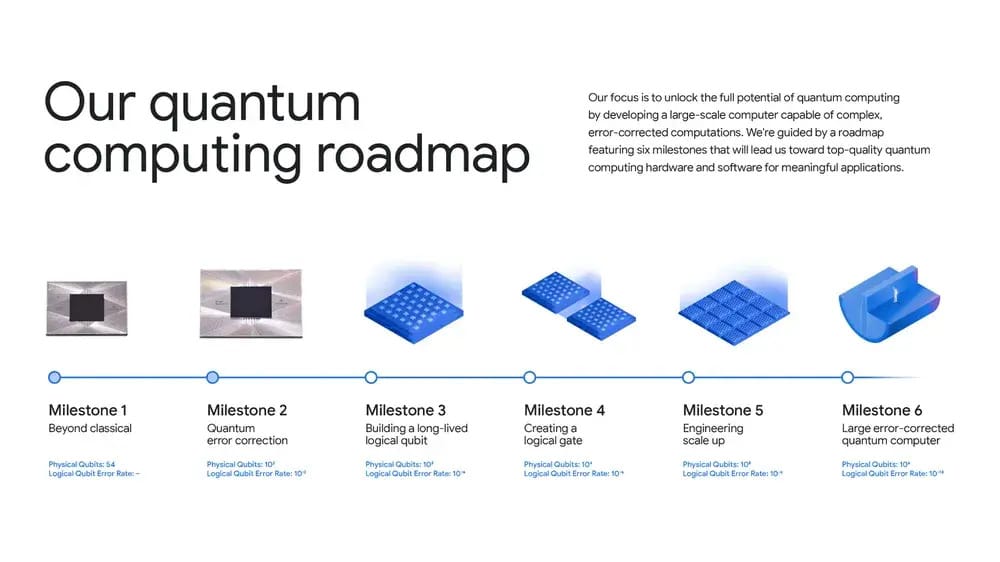

Google Research’s “Magic Cycle”: Cancer AI, Quantum Milestone, and Earth AI

Google packaged a run of breakthroughs under its “magic cycle” idea—foundational research that quickly turns into real-world tools. DeepSomatic helped doctors spot cancer-driving mutations (Children’s Mercy found 10 new variants in pediatric leukemia); Cell2Sentence-Scale 27B generated a lab-validated therapy hypothesis that made cancer cells more visible to the immune system; the Willow chip powered verifiable quantum advantage via the Quantum Echoes algorithm (pointing to future materials/medicine modeling); and Earth AI aims to fuse weather, population, and infrastructure data for richer crisis and climate insights. Sources: Google Keyword

💡 Treat this as a playbook for impact. Pilot cancer- or quality-control-style detection where you have lots of noisy data, try geospatial “whole-picture” dashboards for operations and risk, and keep quantum on your watchlist (start with small proofs-of-concept; don’t move core work yet). Set clear guardrails (data consent, provenance, human review) so wins are safe, explainable, and usable.

Amazon Debuts AI Smart Delivery Glasses for Last-Mile Delivery

Amazon is piloting smart delivery glasses that use on-device computer vision and AI to show drivers hands-free directions, package ID, hazard cues, and proof-of-delivery prompts in a heads-up display. The glasses auto-activate at stops, guide walking routes in complex sites (e.g., apartments), and include a vest-mounted controller, swappable batteries, and an emergency button; future versions aim at real-time defect detection and safety alerts. Source: Amazon News

💡 Treat this as a template for frontline AR. Run a small pilot on high-density routes, track safety incidents, misdeliveries, and stop time per package, and compare to phone-based workflows. Build guardrails now: MDM enrollment, camera/privacy notices, data retention limits, and opt-in policies. Start with union/staff reps, offer RX lens options, and budget for spares/swaps so downtime doesn’t spike.

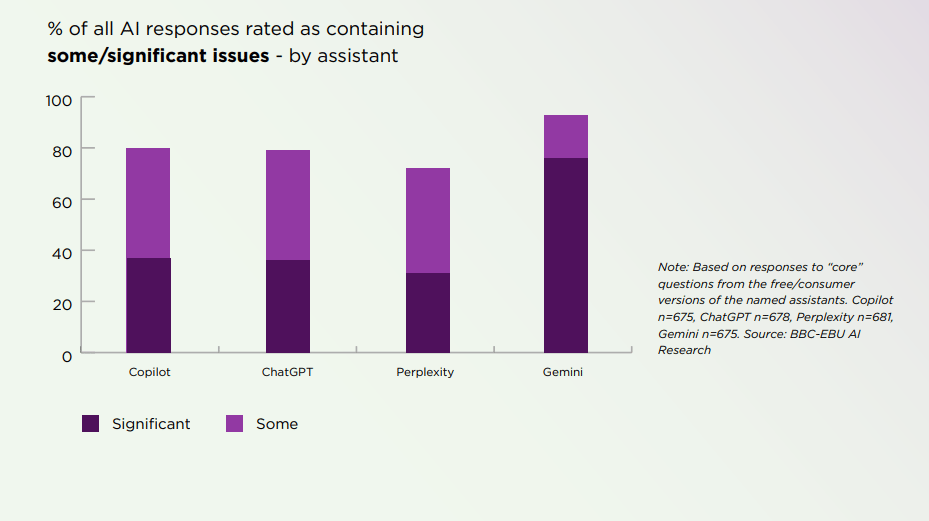

AI Assistants Flub News 45% of the Time, Global BBC/EBU Study Finds

A large cross-border review led by the BBC and coordinated by the EBU tested 3,000+ answers from ChatGPT, Copilot, Gemini, and Perplexity across 18 countries and 14 languages and found systemic problems: 45% of replies had at least one significant issue, 31% had sourcing errors (missing/misleading/incorrect attributions), and 20% contained major inaccuracies or hallucinations. Gemini performed worst, with significant issues in 76% of responses, largely due to poor sourcing. With AI assistants already replacing search for some audiences (7% of all online news consumers; 15% of under-25s), the study warns of knock-on risks to public trust, volunteer contributions, and funding for original news. The EBU released a “News Integrity in AI Assistants” toolkit and is urging regulators to enforce existing rules on information integrity and media pluralism. Source: BBC / EBU

💡 Treat assistant answers like work from a new intern: useful, but always check the sources. Turn on “show citations by default,” block unsourced summaries in user-facing apps, and route sensitive topics to human review. Track a few simple metrics (citation rate, click-through to sources, error rates on sampled answers) and make vendors commit to them in SLAs. Teach staff “trust but verify” habits (open the link; check date and publisher), and add clear labels so customers know when content is AI-summarized.
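The three metrics named above are easy to compute from a small, human-reviewed sample of answers. Here is a rough sketch; the `SampledAnswer` fields and the `assistant_metrics` function are hypothetical names, and how you define an "error" is up to your reviewers.

```python
from dataclasses import dataclass


@dataclass
class SampledAnswer:
    has_citation: bool          # the answer showed at least one source
    source_clicked: bool        # the reader opened a cited source
    reviewer_found_error: bool  # a human reviewer flagged a significant issue


def assistant_metrics(samples):
    """Compute citation rate, click-through rate, and error rate."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sampled answer")
    cited = [s for s in samples if s.has_citation]
    # Click-through is measured only over answers that had a citation.
    clicks = sum(s.source_clicked for s in cited)
    return {
        "citation_rate": len(cited) / n,
        "click_through_rate": clicks / len(cited) if cited else 0.0,
        "error_rate": sum(s.reviewer_found_error for s in samples) / n,
    }
```

Even a weekly sample of a few dozen answers gives you a trend line to hold against a vendor SLA.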

Sponsored by World AI X

Celebrating the CAIO Program July 2025 Cohort! Yvonne D. (Senior Manager, Ontario Public Service Leadership, Canada)

About The AI Citizen Hub - by World AI X

This isn’t just another AI newsletter; it’s an evolving journey into the future. When you subscribe, you're not simply receiving the best weekly dose of AI and tech news, trends, and breakthroughs—you're stepping into a living, breathing entity that grows with every edition. Each week, The AI Citizen evolves, pushing the boundaries of what a newsletter can be, with the ultimate goal of becoming an AI Citizen itself in our visionary World AI Nation.

By subscribing, you’re not just staying informed—you’re joining a movement. Leaders from all sectors are coming together to secure their place in the future. This is your chance to be part of that future, where the next era of leadership and innovation is being shaped.

Join us, and don’t just watch the future unfold—help create it.

For advertising inquiries, feedback, or suggestions, please reach out to us at [email protected].
